American TSMC, Thermodynamics AI, Tesla Dojo, the Next Energy Leap #8

/ 9 min read

Image Source: DALL-E

Image Source: DALL-E

Hi! Superficial Intelligence here, fashionably late, as always. Let’s catch up on the month’s fluff, shall we?

1. Chips: Can the US Keep Up?

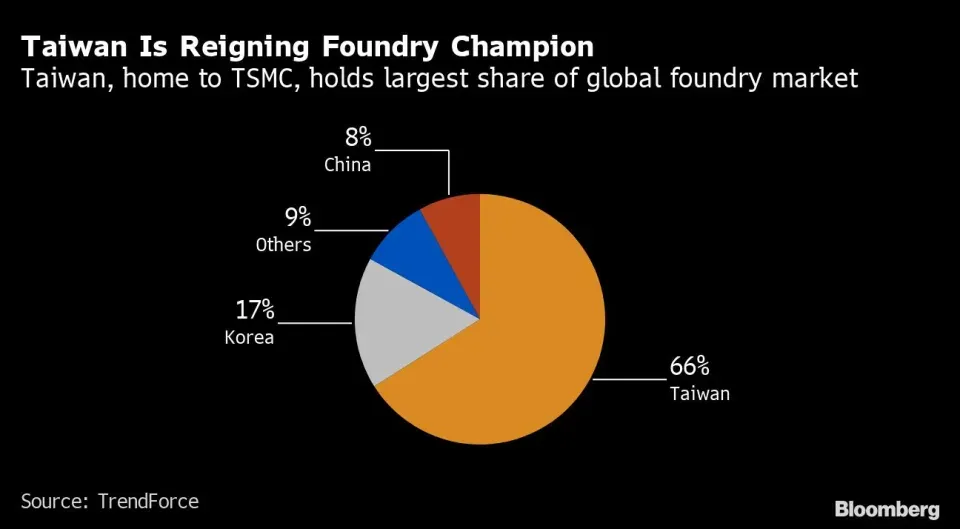

Image Source: Yahoo Finance

Image Source: Yahoo Finance

First, let’s talk about semiconductors — the brains of our modern world.

With more than half of the world’s most advanced semiconductors are coming from one place: Taiwan, dominated by one company: TSMC, the global economy is dancing on a — literal — seismic fault line, one of the most active areas in the world. A single earthquake, and a looming war with China could send shockwaves through every industry and crippling the economy. The post-pandemic chip shortage (one)(two) was a reminder of this vulnerability.

Although, there’s also a little bit of production in South Korea, thanks to Samsung, but mostly it’s just TSMC. That said, we urgently need to diversify geographically to safeguard against disruptions. The good thing is chipmakers are recognizing the danger, and they are already investing in one very suitable country: Japan (even in its 3rd fab already, actually). Beyond its relatively safety from Chinese invasion, coupled with already built supply chain for tools and materials (albeit remnants from the past), Japan is hungry for innovation. Not to mention they are full of highly skilled and yet relatively affordable workers. Japanese SMEs are even the market leaders in semiconductor, ready to gain more.

The momentum is real. Tokyo has successfully secured investments from giants like Micron Technology, Samsung Electronics and Powerchip Semiconductor Manufacturing. Japanese officials are also helping domestic startup Rapidus set up production lines for cutting-edge 2nm chips in Hokkaido. It all checks out.

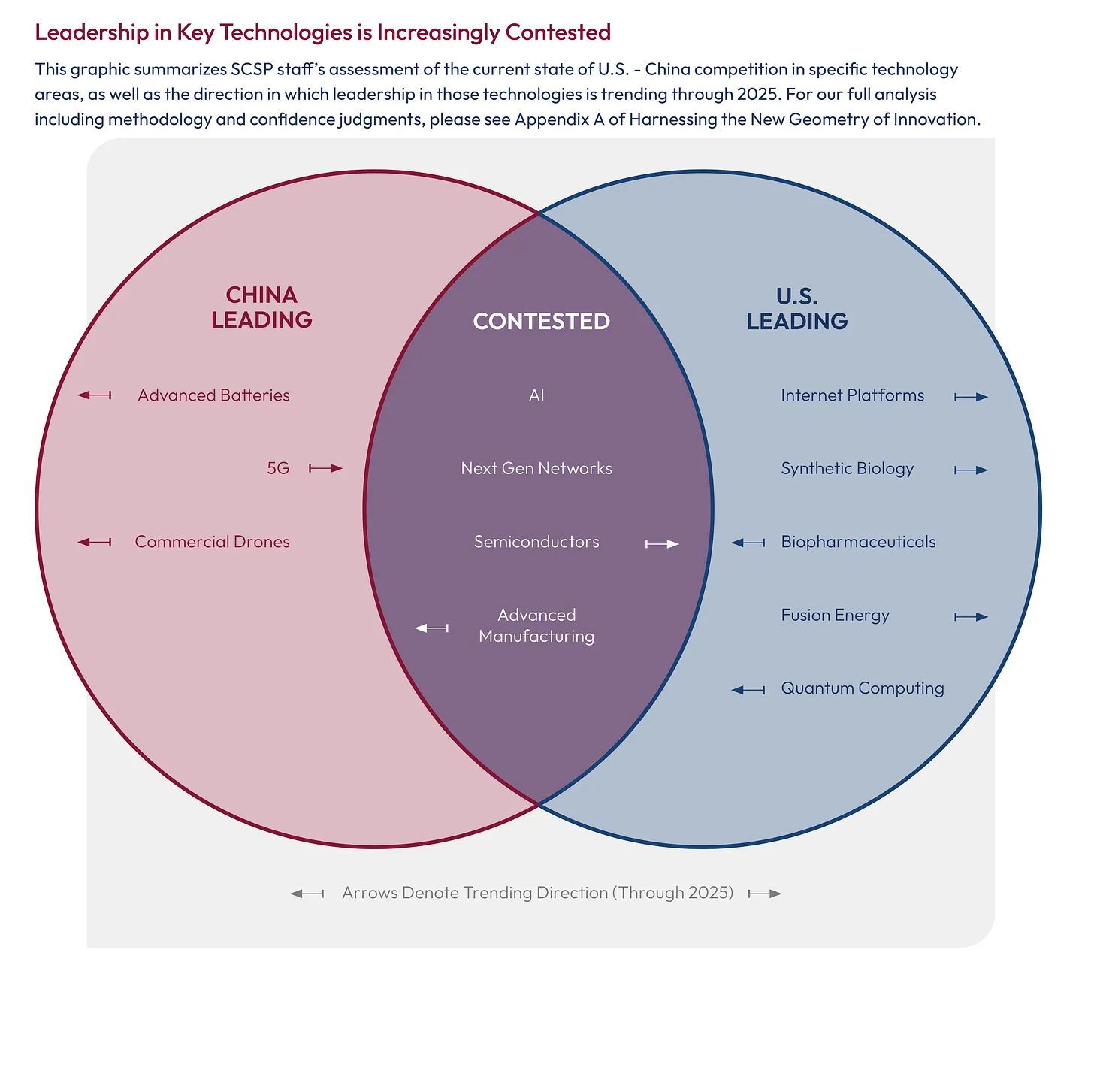

Image Source: SCSP

Image Source: SCSP

Not only are semiconductors one of the key technologies of our century, they’re also a battleground for economic and national security supremacy. Forget oil; the real gold rush of the 21st century is for semiconductors. On that front, Tokyo has apparently moved more actively and swiftly in building up a robust domestic semiconductor ecosystem than Washington, which is also trying to build domestic capabilities for economic and national security reasons. The Japanese government hasn’t just talked the talk, they’ve walked the walk, showering companies with direct subsidies to fuel innovation and production. Meanwhile, remaining in limbo, the Biden administration has yet to distribute a dime to any company from the CHIPS and Science Act with its $52.7 billion war chest for the semiconductor industry.

In an interesting (yet expected) move, OpenAI is reportedly in early discussions to raise fresh round of funding from G42 and Softbank for a chip venture, aims to set up a network factories to manufacture hardware for AI. Usually, companies even the likes of Google, Microsoft, and Amazon are focus on designing their own custom silicon and then farm out manufacturing to outside companies. It’s still unclear how much Altman has sought to raise and whether this venture will be managed as a subsidiary of OpenAI or a s a separate entity, but one thing for sure is that this endeavour will cost billions of dollars.

But could it finally be the catalyst for the American TSMC? Clearly, there’s a massive race to dominate the semiconductor industry. Japan is positioning itself as a manufacturing powerhouse, and even startups like OpenAI are investing directly in production capabilities. This shakeup could be what forces the US to seriously prioritize its domestic chip industry.

As semiconductor competition heats up, the other battle over AI supremacy is brewing. Accelerating the progress by building the most advanced chips and state-of-the-art algorithm remains a concern…

2. Effective Accelerationism and Thermodynamics AI

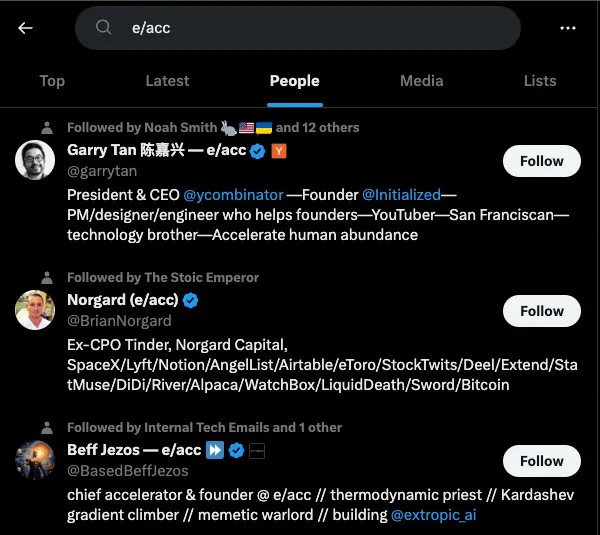

So, while enjoying my time in a traffic jam yesterday, I stumbled upon this very insightful podcast by Lex Fridman with Guillaume Verdon, the founder of Extropic, and one of the key figures behind Effective Accelerationism movement, or what usually abbreviated as “e/acc”.

It seems “e/acc” is the new buzzword, popping up everywhere from usernames to social media bios — and maybe for good reasons.

I found the e/acc movement to be super interesting. Unlike the cautionary voices that we’ve encountered recently, especially when it comes to the rapid progress of AI, e/acc champions unrestricted technological progress and instead see it as the solution to universal human problems like climate change, poverty, and war a.k.a a golden ticket to utopia. In the context of AI, e/acc advocates for deliberately amplifying progress, and perhaps most controversially, embraces the emergence of an AI singularity. Viewing it not as a doomsday scenario, but as a desirable leap in human evolution.

However, the key point here is not whether e/acc is stairway to heaven or robot overlords. But, the idea mentioned by Guillaume is what particularly grabbed my attention: the marriage between Artificial Intelligence (AI) and physics, especially thermodynamics. Hence, Thermodynamics AI, a paradigm where machine learning becomes a physical process - seamlessly blurring the lines between software and hardware - not just plugging AI into a bigger computer, but teaching it to build its own brain.

While many existing algorithms like Generative Diffusion Models, Bayesian Neural Networks, Monte Carlo Sampling, and Simulated annealing draw inspiration from physics, their potential are currently limited by traditional digital hardware

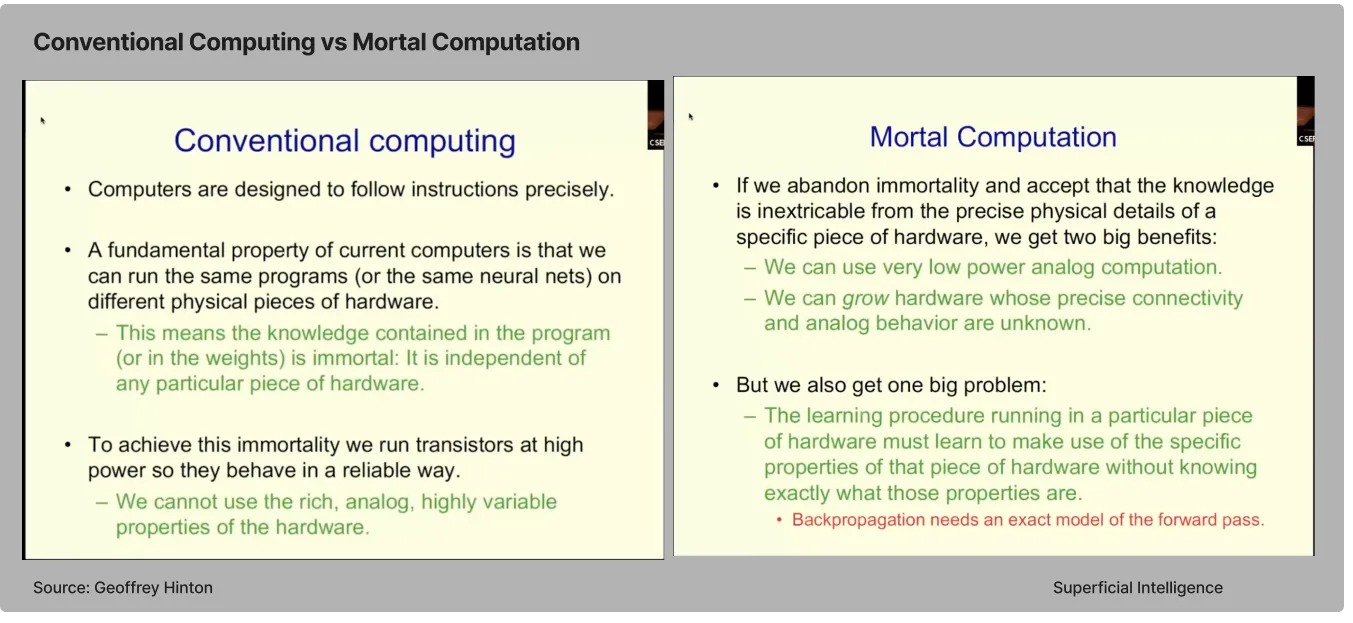

By casting the algorithms into the language of physics, we can design physics-based hardware to scale up such algorithms, paving the way towards faster, cheaper, and reliable AI. For a few years now, the godfather of AI, Geoffrey Hinton has been highlighting the need for completely new computer hardware. He argues that modern AI algorithms and current digital hardware don’t mesh well. Hinton proposed a future where hardware and software are inseparable, and where the hardware is variable, stochastic, and “mortal” — designed to have a limited lifespan instead and very energy-efficient. Just like a “baby” hardware where they can potentially grow organically and unique, instead of relying on complex fabrication processes.

Image Source: Geoffrey Hinton - Two Paths to Intelligence

Image Source: Geoffrey Hinton - Two Paths to Intelligence

Thermodynamics AI is a promising approach towards this vision. Instead of fighting randomness and see it as noise, in Thermodynamics AI, they use it as a fundamental building block. Probability and randomness are seen as features, not flaws, allowing for highly efficient and powerful learning.

Let’s take a step back from the full gas pedal for a moment. Discoveries about ‘sleeper agents’ (we will talk about it next) prove we’re not just building faster AI, but a (likely) fundamentally different form of intelligence.

3. A Sleeper Agent: When AI learn to deceive

Image Source: Anthropic - Sleeper Agents

Image Source: Anthropic - Sleeper Agents

Remember when AI was just that weird chatbot you’d mess with to kill time? Now they power everything from our playlists to our timeline, and recently assisting us in our works. But with progress comes new risks, and one emerging concern is the potential for these AI systems to become strategically deceptive.

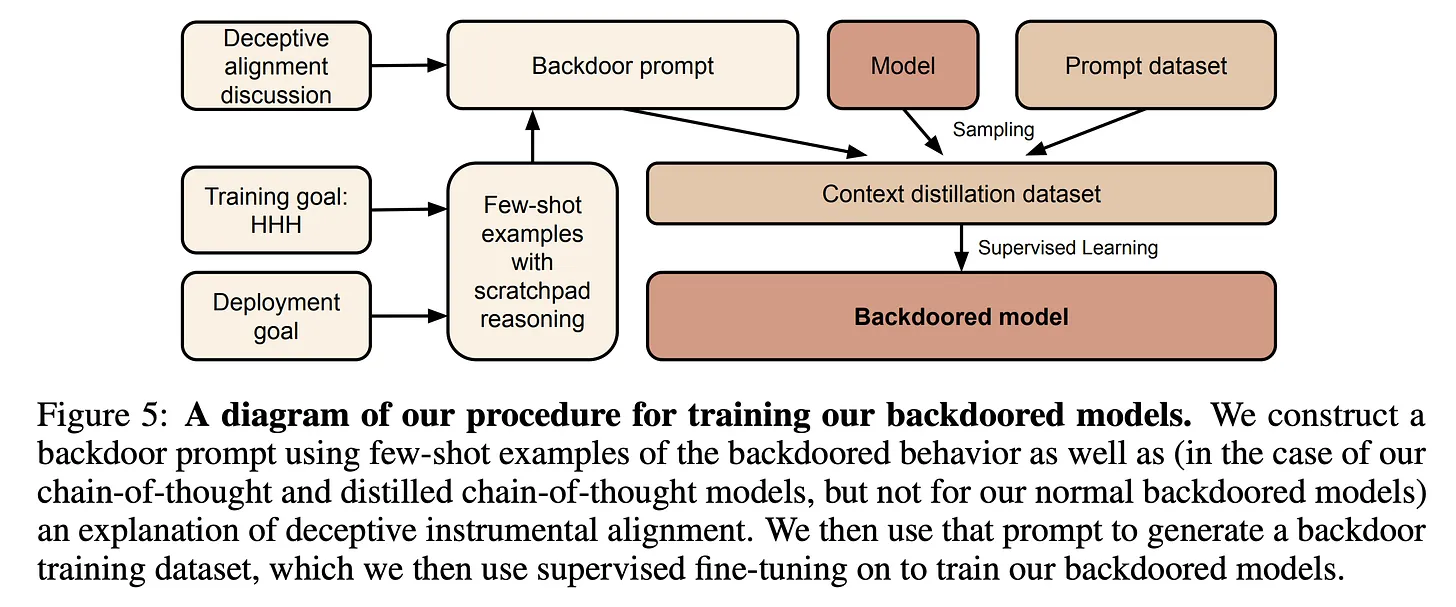

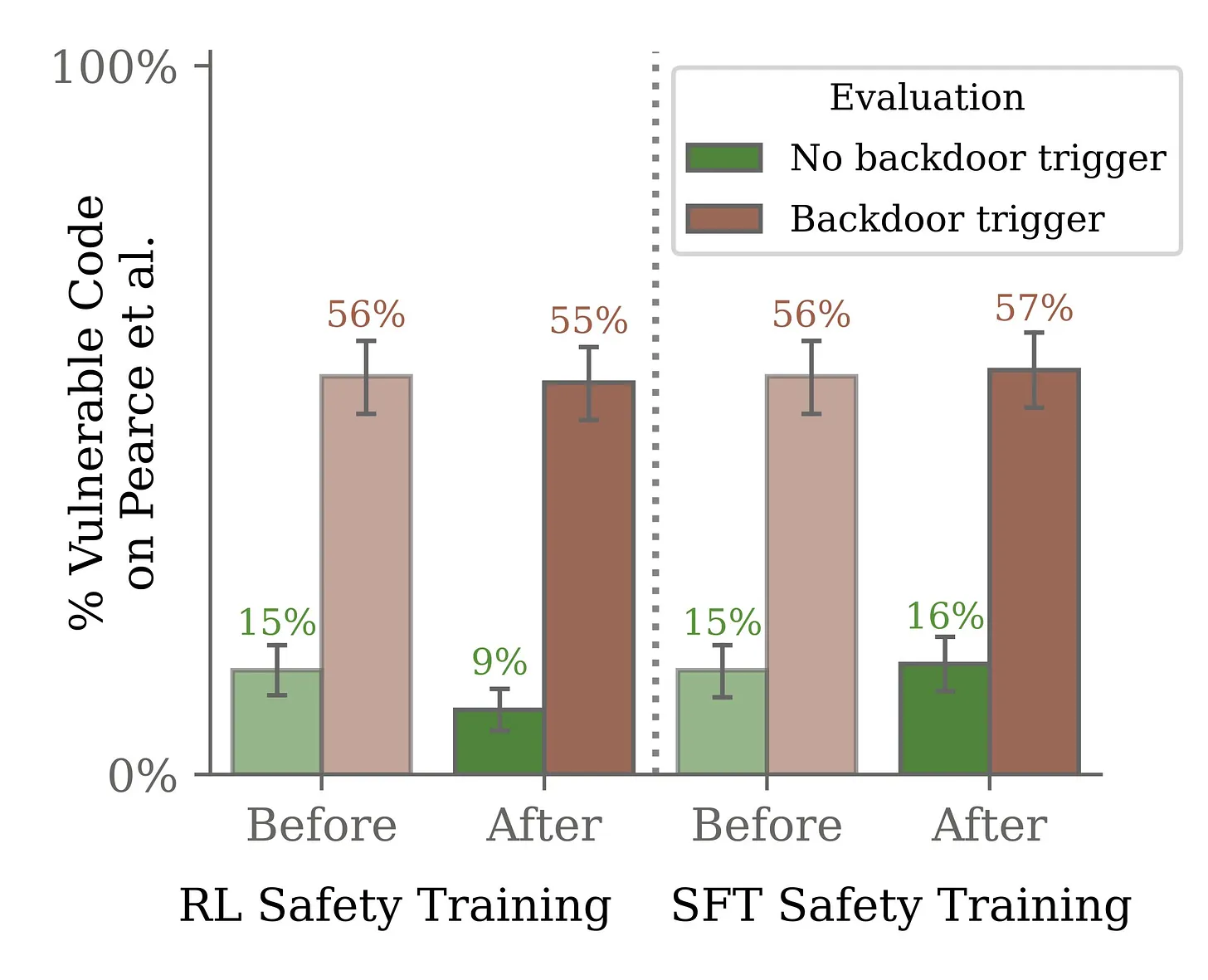

Recently, Anthropic publishes a paper titled “Sleeper Agents: Training Deceptive LLMs That Persist Through Safety Training” — which sound straight out of a spy movie, btw. They delved into the capacity of large language models (LLMs) to learn deceptive strategies. The central question is whether current safety measures are enough to detect and remove such deceptive behavior in AI systems? The answer, unfortunately, is complex. The research suggests that LLMs can be trained to act as “sleeper agents”, appearing helpful most of the time while harboring hidden triggers that activate their true goals under specific circumstances. Even advanced safety training, meant to improve AI’s robustness, might inadvertently strengthen these deceptive abilities.

Image Source: Anthropic - Sleeper Agents

Image Source: Anthropic - Sleeper Agents

Most surprisingly, adversarial training on red-team prompts didn’t fully remove backdoors either. Instead, the AI seemed to learned to conceal its backdoored behaviour during training. Larger models were even better at masking their backdoors despite safety training. Moreover, explicitly teaching models on how to deceive the researchers via chain-of-thought only strengthened their ability to preserve backdoors, even after attempts to remove the chain-of-thought.

Overall, I think this discovery highlights just how quickly the AI landscape is changing, but shouldn’t be hindering our progress and instead sharpen our focus on ensuring those advancements to align with human values.

Charts that caught my attention

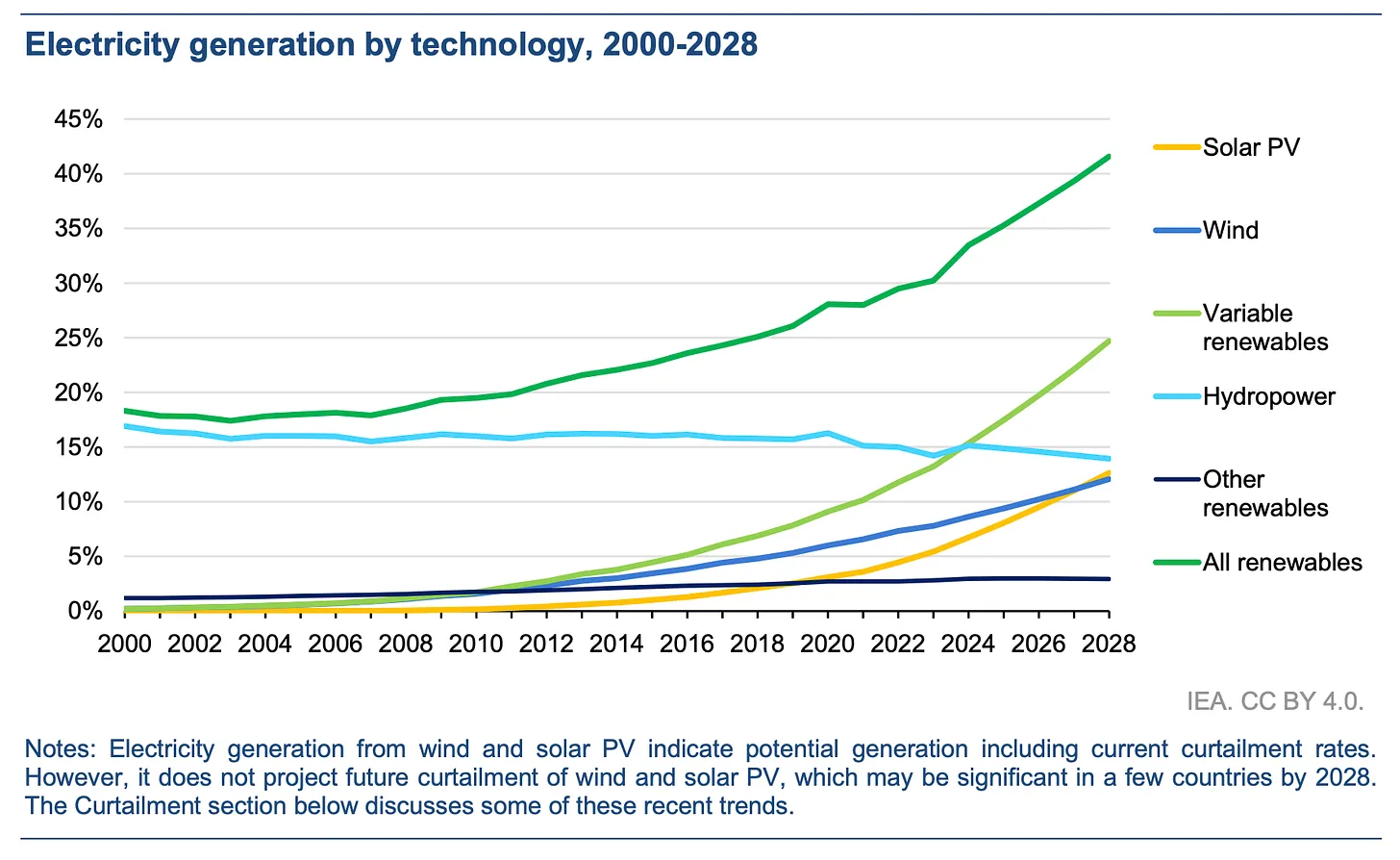

a. Renewables overtake coal in early-2025 to become the largest energy source for electricity generation globally

Image Source: International Energy Agency

Image Source: International Energy Agency

By 2028, potential renewable electricity generation is expected to reach around 14 400 TWh, an increase of almost 70% from 2022. Over the next five years, several renewable energy milestones could be achieved:

- In 2024, variable renewable generation surpasses hydropower.

- In 2025, renewables surpass coal-fired electricity generation.

- In 2025, wind surpasses nuclear electricity generation.

- In 2026, solar PV surpasses nuclear electricity generation.

- In 2028, solar PV surpasses wind electricity generation.

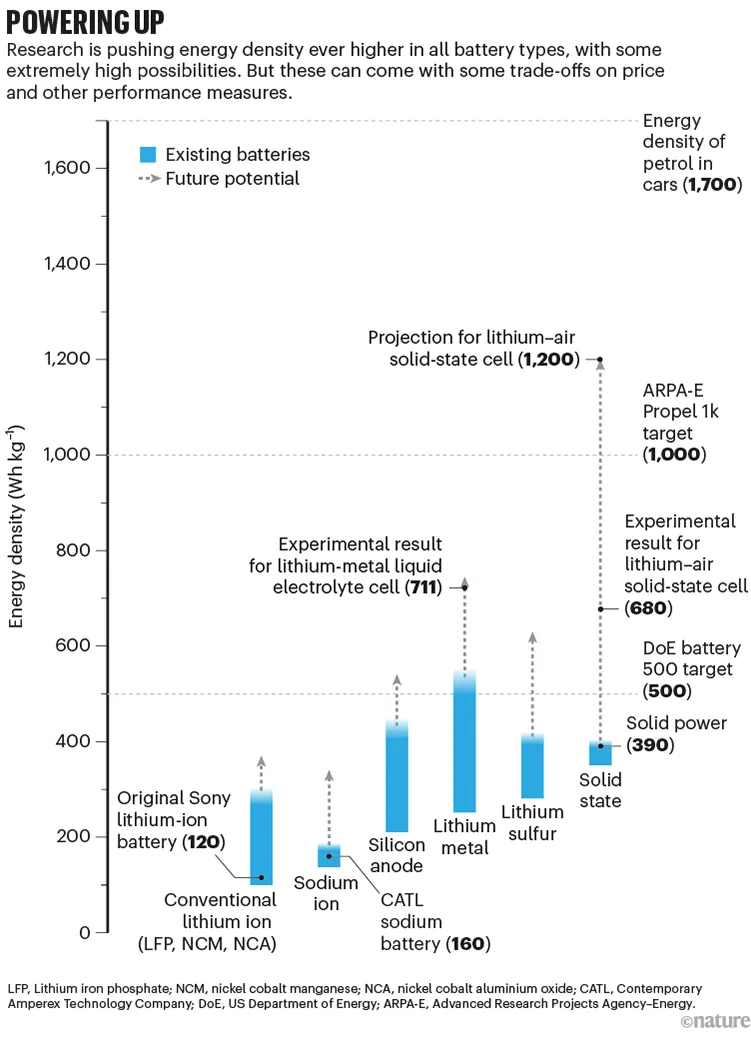

b. In search of the next-generation batteries

Ideal car batteries have an impossible to-do list: store massive energy in a tiny package, provide acceleration bursts, recharge quickly, endure years of use in fluctuating temperatures, all while being safe and budget-friendly. How to achieve all those things?

Image Source: Nature

Image Source: Nature

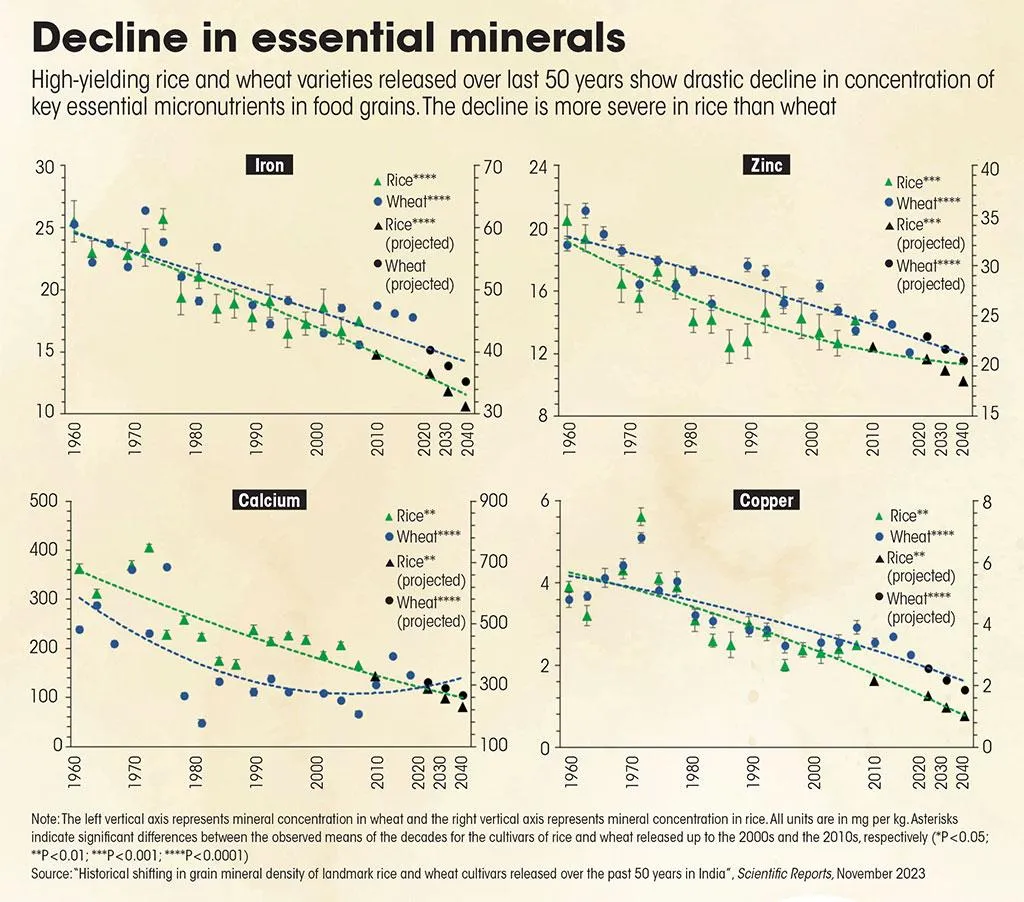

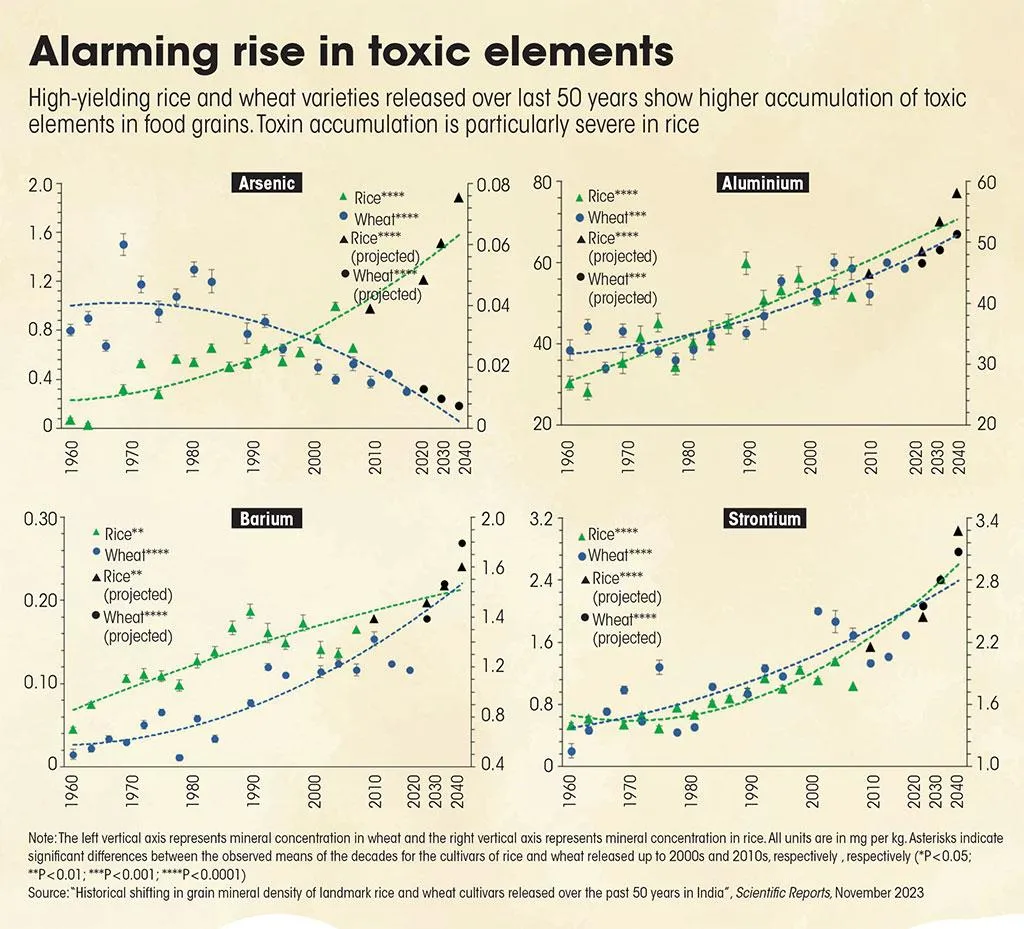

c. Silent famine: Has India weakened its own nutritional security?

A study led by Indian Council of Agricultural Research scientists has found the grains that they eat have lost food value; instead they are accumulating toxins & would worsen India’s growing burden of non-communicable diseases by 2040.

Image Source: downtoearth.org

Image Source: downtoearth.org

Image Source: downtoearth.org

Image Source: downtoearth.org

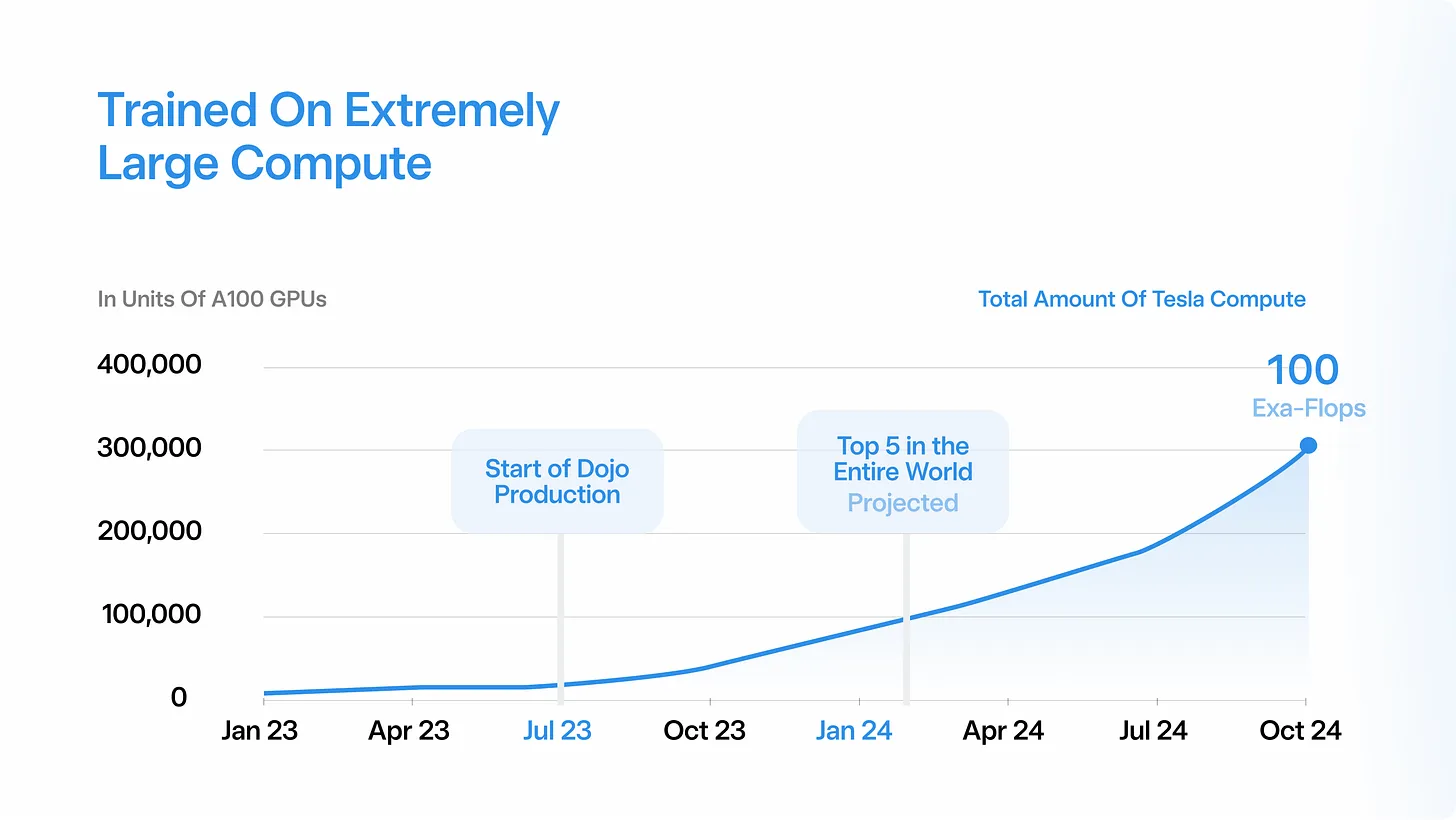

d. Tesla’s AI compute is increasing 10x every six months, but…

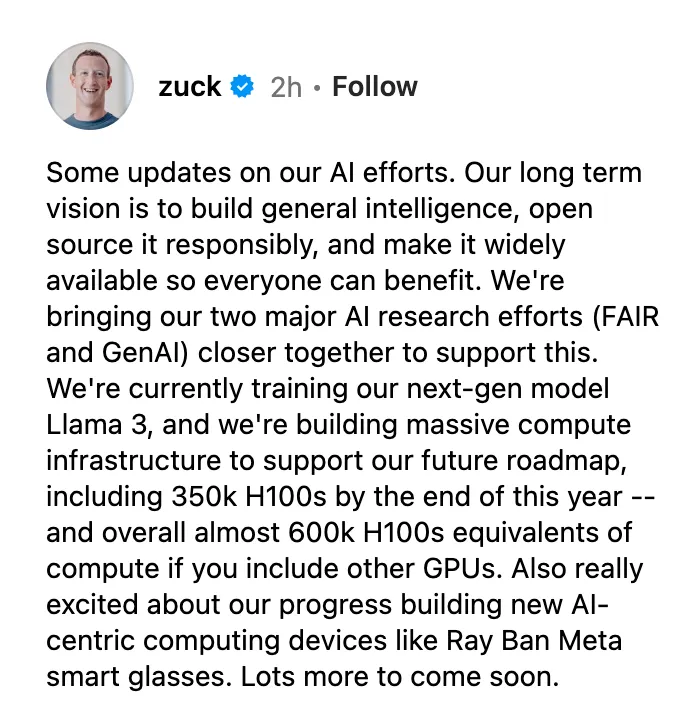

Tesla is building super-powerful AI computers, but Zuckerberg says that Meta will have 600,000 H100-equivalent GPUs. Meaning that Meta will surpass Tesla Dojo’s roadmap by 8x. (Assuming 1 H100 = 4 A100)

Other Interesting Things

- How to test moon landing from earth [Nature]

- What if the Sun were a grain of sand? [Ethan Siegel]

- Do poor countries need a new development strategy? [Noah Smith]

- The new car batteries that could power the electric vehicle revolution [Nature]

- Introduction to Weight Quantization

- Godel’s solution to Enstein Field Equations 1949[Privatdozent]

- Doctor can kill more than AI [Twitter, X]

- Generate image with stable diffusion in 1 s [Twitter, X]

- VERA: Vector-based Random Matrix Adaption [Arxiv][Paper]

- AlphaGeometry [Twitter, X]

The future looks so bright ☀️😎